The data processing pipelines of e-commerce enterprises are a common example of big data orchestration. The first step in these pipelines is data ingestion from multiple sources, including weblogs, transactional databases, and social media platforms. After that, the data is processed using technologies such as Apache Spark, Apache Hadoop, and Apache Flink. Ultimately, the processed data is loaded into a data warehouse, such as Amazon Redshift, Google BigQuery, or Snowflake, for further analysis and reporting.

Big data orchestration solutions like Apache Airflow can be used in such circumstances to automate and schedule the various data processing activities. Airflow's web-based user interface allows users to view, manage, and monitor workflows, while the workflows themselves are defined in Python code. It also includes features like task dependencies, data lineage tracking, and alerts to help data processing run smoothly.
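To make the idea of task dependencies concrete, here is a minimal sketch of an ingest, process, and load pipeline run in dependency order. It uses only Python's standard `graphlib` module and not Airflow's own API; the task names are hypothetical, and a real orchestrator would launch actual jobs where the comment indicates.

```python
from graphlib import TopologicalSorter

# Hypothetical task names for an e-commerce style pipeline.
# Each key maps a task to the set of tasks that must finish before it starts.
dependencies = {
    "ingest_weblogs": set(),
    "ingest_transactions": set(),
    "ingest_social_media": set(),
    "process_data": {"ingest_weblogs", "ingest_transactions", "ingest_social_media"},
    "load_warehouse": {"process_data"},
}


def run_pipeline(deps):
    """Visit every task after its upstream tasks and return the execution order."""
    executed = []
    for task in TopologicalSorter(deps).static_order():
        # A real orchestrator would start a Spark job, a warehouse load, etc. here.
        executed.append(task)
    return executed


order = run_pipeline(dependencies)
print(order)
```

The three ingestion tasks have no upstream dependencies, so they may appear in any order, but processing always follows all of them and the warehouse load always comes last.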

Introduction

Before learning about our topic, i.e. "Big data orchestration using Apache Airflow", let us understand big data processing and its needs. Big data processing involves complex workflows and multiple stages, such as data ingestion, processing, and analysis. Managing these workflows and their dependencies can become cumbersome. This is where big data orchestration comes into play.

Apache Airflow is an open-source platform that helps manage and orchestrate complex workflows in big data processing pipelines. Airflow lets users define, schedule, and monitor workflows programmatically. It allows users to organize and execute jobs and workflows based on variables like time, data availability, and external triggers. Workflows are defined using Python code, which makes them easy to modify, maintain, and test. In addition, Airflow provides a rich set of pre-built operators to interact with various data sources and sinks, including databases, cloud services, and file systems.

Airflow's web interface allows users to monitor the status of running workflows, set up alerts, and retry failed tasks. Users can also visualize dependencies among tasks, which gives greater clarity and control over their data pipelines. Additionally, Airflow integrates with popular big data technologies such as Apache Hadoop, Apache Spark, and Apache Kafka.

Let us now learn about big data orchestration before learning about "Big data orchestration using Apache Airflow".

Big data orchestration automates, schedules, and monitors the data operations required for big data processing. It is an important part of big data management since it helps to streamline the data acquisition, processing, and distribution processes.

Big data orchestration is required because big data processing is complex and time-consuming. It necessitates integrating and orchestrating various tools and technologies so that data is processed efficiently and on time. Big data orchestration aids in the management and scheduling of these tools and technologies, ensuring that data processing operations run smoothly.
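One way an orchestrator keeps workflows running is by retrying failed tasks automatically; Airflow exposes this through task-level settings such as `retries` and `retry_delay`. The following is a minimal plain-Python sketch of that idea, not Airflow's implementation. The helper `run_with_retries` and the flaky task are hypothetical names introduced for illustration.

```python
import time


def run_with_retries(task, retries=3, delay_seconds=0.0):
    """Call `task` until it succeeds or the retry budget is exhausted.

    `retries` counts the extra attempts allowed after the first failure.
    """
    attempt = 0
    while True:
        try:
            return task()
        except Exception as exc:
            if attempt >= retries:
                raise  # Out of retries: surface the failure to the caller.
            attempt += 1
            print(f"attempt {attempt} failed ({exc}); retrying")
            time.sleep(delay_seconds)


# Hypothetical flaky task: fails twice, then succeeds on the third call.
calls = {"count": 0}


def flaky_ingest():
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("source temporarily unavailable")
    return "ingested"


result = run_with_retries(flaky_ingest, retries=3)
print(result, calls["count"])
```

A fixed delay is used here for simplicity; a production setup would typically add a longer or growing delay between attempts and an alert once the final attempt fails.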

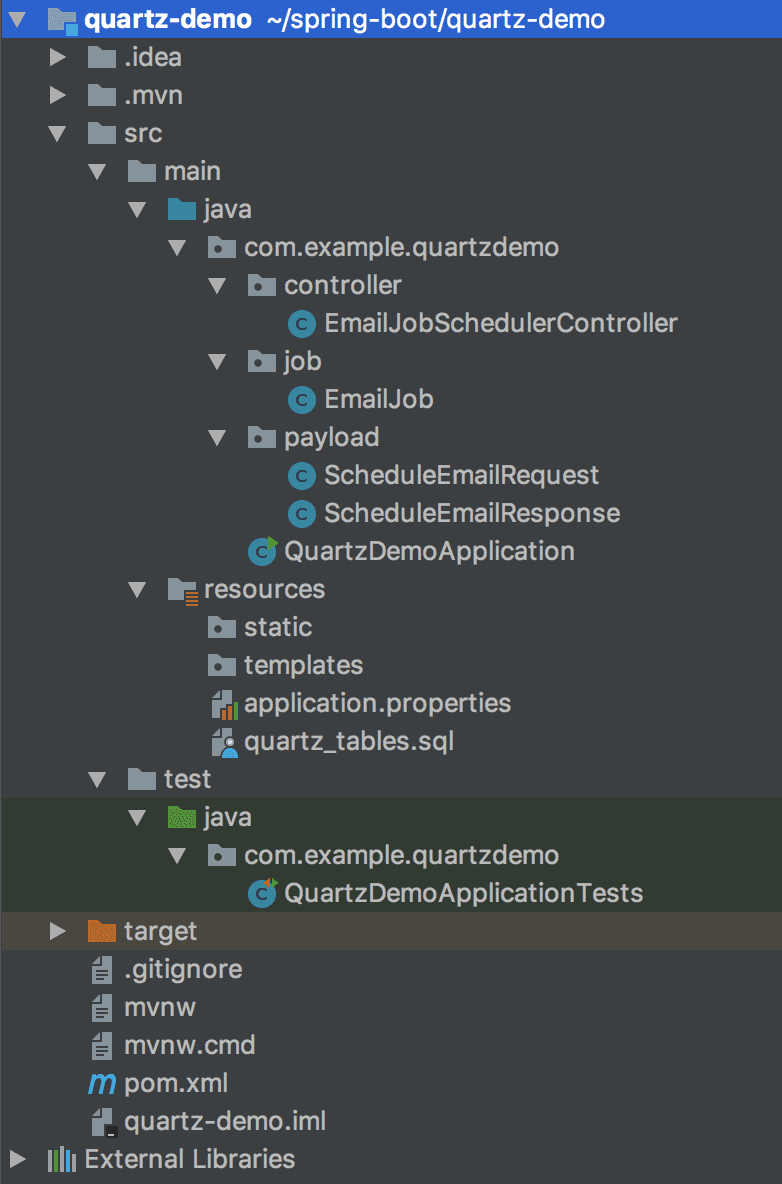

Apache Airflow is an open-source platform for programmatically creating, scheduling, and monitoring workflows. Data engineers can use it to create and orchestrate complicated data pipelines for big data processing. In addition, users can manage task dependencies, monitor progress, and automate error handling with Airflow. Airflow provides an easy-to-use interface for visualizing and managing workflows. It can be integrated with various big data technologies such as Apache Hadoop, Spark, and Hive.

Setting up a JDBC JobStore in Spring takes a few steps. First, we'll set the store type in our application.properties: -store-type=jdbc

Then we'll need to enable auto-configuration and give Spring the data source needed by the Quartz scheduler.

#Airflow scheduler versus Quartz scheduler driver#

There are several properties to set for a JDBCJobStore. At a minimum, we must specify the type of JDBCJobStore, the data source, and the database driver class. There are driver classes for most databases, but StdJDBCDelegate covers most cases: =.JobStoreTX
